Chat with Datasets

Donovan goes beyond traditional search interfaces by introducing a dynamic and conversational way to interact with datasets.

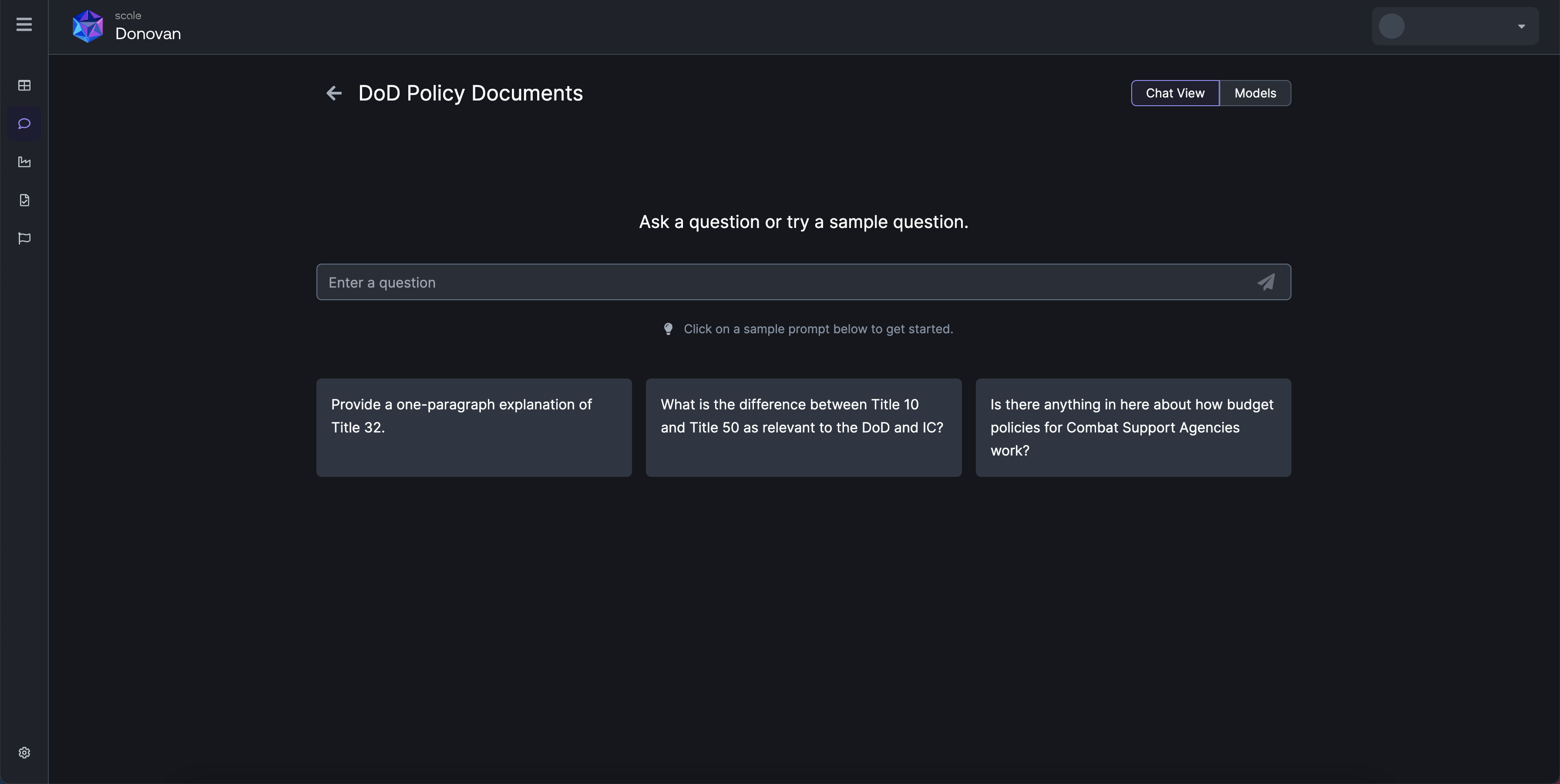

Asking Questions and Exploring Pre-written Queries

With Donovan's chat interface, you have the freedom to ask questions just like you would in a conversation. Enter your queries in plain language, and Donovan's AI engine will swiftly identify the most relevant information from the datasets to provide you with accurate answers.

To streamline your interaction further, Donovan offers a selection of pre-written questions right below the chat box. These questions cover a wide range of topics, making it easy for you to quickly access critical information without having to formulate your queries from scratch.

Select from pre-generated questions.

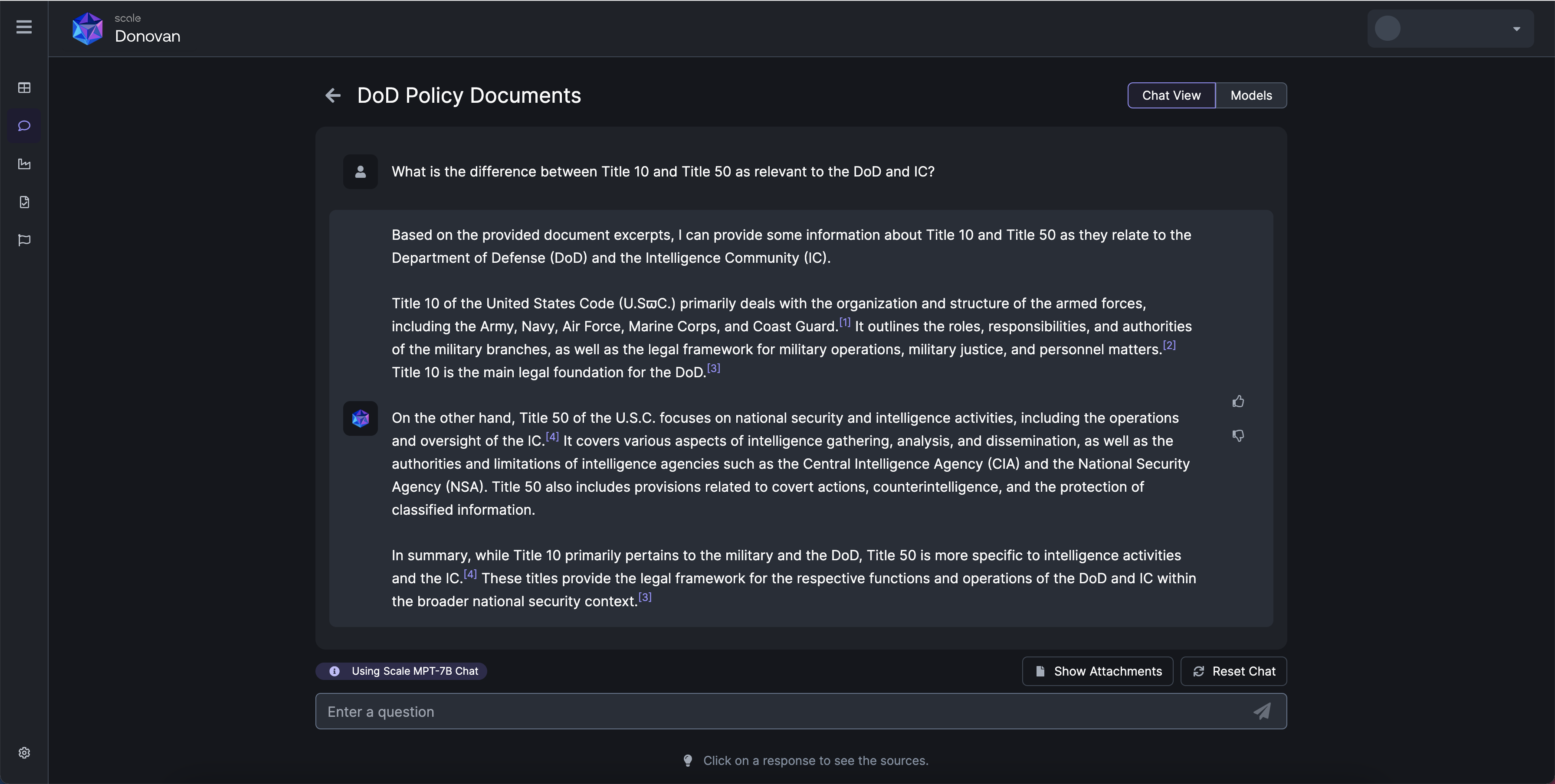

Awareness of the Model in Use

Transparency is key to ensuring confidence in the insights you receive. Donovan's chat interface displays the specific model you are interacting with. This empowers you to understand the context of the responses you receive and make informed decisions based on the AI model's capabilities.

The model in use is displayed in the bottom-left corner. In this example, it is Scale MPT-7B.

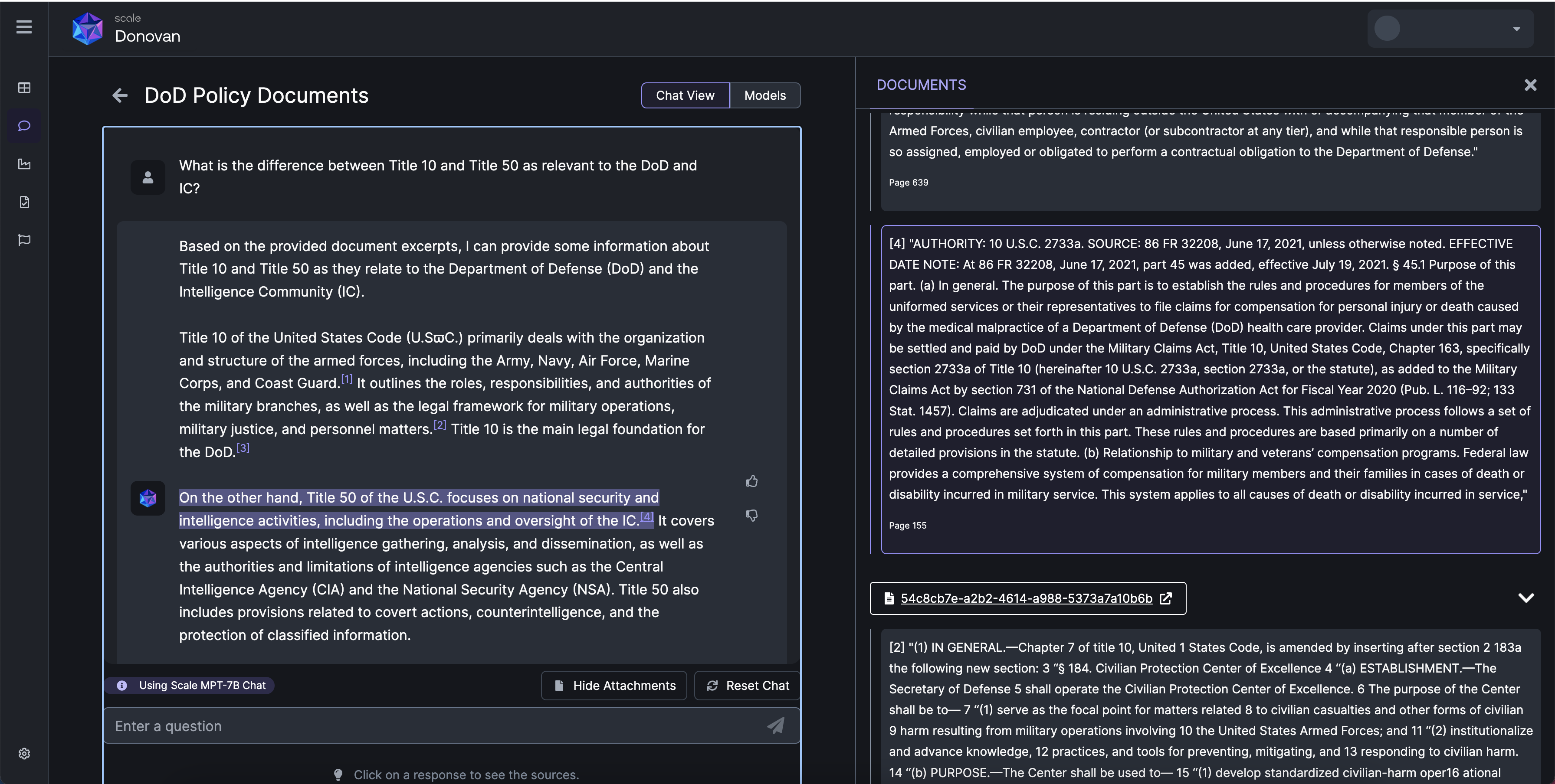

Citations for Enhanced Credibility

Donovan takes transparency to the next level by providing citations for the answers it generates. When the AI model responds to your query using information from any documents, it highlights the source material. This allows you to verify the information directly from the original documents, enhancing the credibility and reliability of the insights you gather.

Click on the citations or "Show Attachments" to see the documents used to produce the answers.

Tips and Tricks - "Prompt Engineering"

Here are some general guidelines for getting the most informative answers from your queries and for reducing the likelihood of overly vague responses, hallucinations, or other errors.

Structuring Queries

- Ensure clarity and specificity. Precise language in prompts tends to produce useful outputs. Vague prompts might lead to irrelevant or inaccurate results.

- Provide context. Context helps set the stage for the response you're looking for. Include relevant information or specify the desired perspective to receive a more focused answer.

- Consider the approach. The approach used, whether zero-shot, few-shot, persona-based, or progressive prompting, will impact the quality and relevance of the response. More advanced methods, like persona-based and progressive prompting, require more investment but can produce higher-quality results. The approaches in more detail:

    - Zero-shot prompting: No examples are provided, so the model generates a response based only on its general knowledge and training. This approach is quick but often unreliable.

    - Few-shot prompting: A small set of examples is provided to guide the model's response. This provides more context than zero-shot prompting but risks overfitting if the examples are too similar.

    - Persona-based prompting: The model is asked to respond as if it has a particular persona or role. This helps frame the appropriate content, style, and perspective, but the model still has limited understanding of complex roles.

    - Progressive prompting: Additional context or feedback is provided incrementally to iteratively improve the model's response. This enables explainability and tailored responses but requires significant time and expertise to implement.

    - Tip: Progressive prompting can be combined with a zero-shot approach simply by adding an explicit statement like "let's think step by step" at the end of your query. This can help avoid many logical or mathematical errors in a model's initial response, and it provides explainability and transparency that let an operator modify their approach or adjust course in subsequent queries. Give it a try!
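To make the differences between these approaches concrete, here is a minimal Python sketch that builds each kind of prompt as a plain string. The function names (`zero_shot`, `few_shot`, `persona_based`, `step_by_step`), the "Q:/A:" layout, and the sample question are illustrative assumptions, not part of Donovan. The resulting text is simply what you would type into the chat box.

```python
from typing import Sequence, Tuple


def zero_shot(question: str) -> str:
    """Zero-shot: send the question as-is, with no examples."""
    return question.strip()


def few_shot(question: str, examples: Sequence[Tuple[str, str]]) -> str:
    """Few-shot: prefix the question with a few question/answer pairs."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {question.strip()}\nA:"


def persona_based(question: str, persona: str) -> str:
    """Persona-based: ask the model to answer from a stated role."""
    return f"Act as {persona}. {question.strip()}"


def step_by_step(question: str) -> str:
    """Append the explicit reasoning cue described in the tip above."""
    return f"{question.strip()} Let's think step by step."


# Hypothetical question, used only to show how the helpers compose.
question = "Which documents mention maintenance delays?"
print(step_by_step(persona_based(question, "an experienced logistics analyst")))
```

The helpers compose, so a persona and a step-by-step cue can be applied to the same question. Varying the examples passed to `few_shot` is one way to reduce the risk of the model overfitting to a single pattern.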

Examples

Here are two examples of good prompt engineering, with example queries in quotation marks and each with an explanatory sub-bullet:

- "Act as an experienced military legal officer. Draft a memorandum for your commanding officer regarding the appropriate use of generative AI technology within the squadron. Include relevant regulations and policies to consider as well as recommendations for oversight and accountability."

    - This prompt provides context (the role and audience), specifies the requested product (a memo with recommendations), and includes mandatory elements (regulations, policies, oversight recommendations) to guide the response. The persona helps frame the appropriate style and content.

- "You are an AI safety researcher. Explain three of the biggest risks associated with deploying generative AI for military operations and how those risks could be mitigated."

    - This prompt asks for an explanation of specific risks (three biggest risks) and mitigations from an authoritative perspective (AI safety researcher). The open-ended question allows for a variety of relevant responses, but the persona and risk/mitigation framework provide useful guidance.
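Both good examples share the same ingredients: a persona, a requested product, and a set of mandatory elements. As a rough sketch of that pattern (the `build_prompt` helper and its parameter names are hypothetical, not a Donovan feature), the pieces can be assembled like this:

```python
from typing import Sequence


def join_items(items: Sequence[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b and c'."""
    items = list(items)
    if len(items) == 1:
        return items[0]
    return ", ".join(items[:-1]) + " and " + items[-1]


def build_prompt(persona: str, task: str, required: Sequence[str] = ()) -> str:
    """Combine a persona, a requested product, and mandatory elements."""
    parts = [f"Act as {persona}.", task.strip()]
    if required:
        # Spell out the mandatory elements so the model cannot skip them.
        parts.append(f"Include {join_items(required)}.")
    return " ".join(parts)


prompt = build_prompt(
    persona="an experienced military legal officer",
    task="Draft a memorandum for your commanding officer regarding the "
         "appropriate use of generative AI technology within the squadron.",
    required=["relevant regulations", "policies to consider",
              "recommendations for oversight and accountability"],
)
print(prompt)
```

Keeping the three ingredients as separate arguments makes it easy to notice when one of them is missing before the query is sent.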

By contrast, here are two examples of bad prompt engineering:

- "What are some ways AI can be used in the military? Discuss."

    - This prompt is too vague. It does not specify a requested product, perspective, or mandatory elements. The model has too much latitude to provide an unfocused or irrelevant response.

- "You are a squadron commander. Generate an operations order for your squadron to deploy next week."

    - This prompt assumes the model can appropriately take on the role of a squadron commander and generate a realistic operations order. The model has no true understanding of military operations or a commander's responsibilities, so its response would likely be inaccurate or nonsensical unless it could draw on a corpus of related documents, and even then more explicit references to those documents would help. The prompt also provides no context about the deployment scenario.

Ready to experience the future of information interaction? Let's start a conversation with your datasets and elevate your decision-making.
